Design user evaluation of initial framework design

Evaluation Approaches

The following sections explain different approaches to evaluate the initial framework design.

The currently preferred approach is the group interview combined with instrumenting the users' selection of features. The other approaches require testing users on artificial scenarios that can be compared across the system and the baseline, which takes considerable effort from both the users and the evaluators.

- @Felix_MIchel: This makes sense to me. I edited the page a bit to clarify the different evaluation techniques. -- -- Yolanda, 25 Aug 2014

- Great, then we'll use this to structure the evaluation section of the paper -- -- Felix, 29 Aug 2014

- @Felix_MIchel: How long before the instrumentation of features is implemented? Are we on track for Aug 31 as the task target date? -- -- Yolanda, 25 Aug 2014

- Yes, this has now been completed -- -- Felix, 29 Aug 2014

Group interview

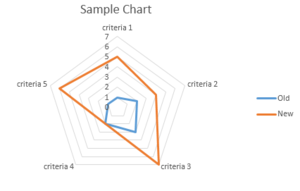

After the new version is completely rolled out, the main users are brought together for a group interview. The basic idea is to have all users discuss the value of every feature (or defined criterion) until they collaboratively agree on a single value. The participants are asked to evaluate both the old version and the new version. A spider diagram can be used to illustrate the difference between the two versions. The figure above shows an example of a spider diagram.

- @Felix_MIchel: Would be good to include here a picture of a spider diagram. -- -- Yolanda, 25 Aug 2014

- Done, see above diagram -- -- Felix, 29 Aug 2014

Comparison with a baseline

Comparing the system with a baseline system helps to show the overall improvement. Each user uses both the system and the baseline system, each with an artificial scenario. The two scenarios need to be designed to have comparable difficulty. In each condition, the time to accomplish the scenario is measured. Different users use the system and the baseline in a different order and with different scenarios. This accounts for both training effects (the user learns something from using the first system, which can improve performance when using the second system) and individual differences (a term in psychology that refers to how the results of an experiment can be chalked up to how a particular person approached the task).
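The assignment of order and scenario described above could be sketched as follows. This is only an illustration under assumptions: the condition names `"system"` and `"baseline"`, the scenario labels `"A"` and `"B"`, and the functions `assignSessions` and `meanTimeSaved` are hypothetical and do not come from the project.

```javascript
// Hypothetical labels: two systems and two scenarios of comparable difficulty.
// Each row is one possible ordering: [first session, second session].
const ORDERINGS = [
  [{ system: "system", scenario: "A" }, { system: "baseline", scenario: "B" }],
  [{ system: "system", scenario: "B" }, { system: "baseline", scenario: "A" }],
  [{ system: "baseline", scenario: "A" }, { system: "system", scenario: "B" }],
  [{ system: "baseline", scenario: "B" }, { system: "system", scenario: "A" }],
];

// Assign participants to the four orderings in rotation, so that each
// ordering is used equally often when the group size is a multiple of 4.
function assignSessions(participants) {
  return participants.map((participant, i) => ({
    participant,
    sessions: ORDERINGS[i % ORDERINGS.length],
  }));
}

// Mean per-participant difference in completion time (baseline minus system).
// A positive value means the system was faster on average.
function meanTimeSaved(results) {
  const diffs = results.map((r) => r.baselineSeconds - r.systemSeconds);
  return diffs.reduce((sum, d) => sum + d, 0) / diffs.length;
}
```

Because every participant uses both systems, the time difference is computed per person, and rotating through the four orderings spreads any training effect evenly over both systems.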

Comparison with an ablated version of the system

This is a special case of comparison with a baseline. An ablated version of the system removes features for a certain time, for a certain user group, or both. This approach allows selected features of the system to be evaluated. Because the ablated version differs from the full system only in the removed features, it is the most comparable baseline system possible.
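One way to express such an ablation is a small set of rules that switch a feature off for a user group, a time window, or both. The sketch below is an assumption about how this might look; the rule format, the feature and group names, the dates, and the function `isFeatureEnabled` are all hypothetical.

```javascript
// Hypothetical ablation rules. Each rule disables one feature.
// A missing field (group, from, until) means "no restriction" on that dimension.
const ablationRules = [
  { feature: "featureA", group: "groupB" },
  { feature: "featureB", from: "2014-09-01", until: "2014-09-15" },
];

// Returns true unless some rule ablates the feature for this user at this time.
function isFeatureEnabled(feature, user, now, rules) {
  return !rules.some((rule) => {
    if (rule.feature !== feature) return false;
    const groupMatches = rule.group === undefined || rule.group === user.group;
    const afterStart = rule.from === undefined || now >= new Date(rule.from);
    const beforeEnd = rule.until === undefined || now < new Date(rule.until);
    return groupMatches && afterStart && beforeEnd;
  });
}
```

Users for whom a rule matches see the ablated version and the others see the full system, so the two groups can be compared on the removed feature alone.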

Instrument the user's selection of a feature

A given feature is instrumented by hiding it until the user asks for it. Each new feature is replaced by a simple box that the user needs to click to activate the feature. This ensures that a user who enables a feature really wants to use it. With this evaluation approach, one measures how often specific features are used by different users.
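A minimal sketch of this kind of instrumentation is shown below. It is not the project's actual code: the class `FeatureUsageLog`, the function `instrumentPlaceholder`, and the user and feature identifiers are hypothetical. The only browser API assumed is the standard `addEventListener` on the placeholder element.

```javascript
// Counts how often each user activates each hidden feature.
class FeatureUsageLog {
  constructor() {
    this.byFeature = new Map(); // featureId -> Map(userId -> activation count)
  }

  recordActivation(userId, featureId) {
    if (!this.byFeature.has(featureId)) {
      this.byFeature.set(featureId, new Map());
    }
    const users = this.byFeature.get(featureId);
    users.set(userId, (users.get(userId) || 0) + 1);
  }

  // Activation counts for one feature, as a plain object keyed by user.
  usageByUser(featureId) {
    return Object.fromEntries(this.byFeature.get(featureId) || []);
  }

  totalActivations(featureId) {
    let total = 0;
    for (const count of (this.byFeature.get(featureId) || new Map()).values()) {
      total += count;
    }
    return total;
  }
}

// Wire a placeholder box to the log: a click is recorded first,
// then the caller-supplied revealFeature() shows the real feature.
function instrumentPlaceholder(placeholder, userId, featureId, log, revealFeature) {
  placeholder.addEventListener("click", () => {
    log.recordActivation(userId, featureId);
    revealFeature();
  });
}
```

Recording the click before revealing the feature means every counted activation corresponds to an explicit request by the user.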

Tracking tools

Most approaches need to track user behavior, and different tools can be used for this. Currently the site is tracked with the [Metrica] tool. The new features are implemented in JavaScript, which modifies the page content massively, and this limits the use of most tracking tools in some ways. Therefore we have also implemented our own JavaScript tracker, which captures all user events directly within the code.
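The general shape of such an in-code tracker might look like the sketch below. This is an illustration only, not the tracker described above: the class `EventTracker`, its batching behavior, and the `send` callback are assumptions. How events actually reach a server is left to the caller.

```javascript
// Collects user events raised directly from feature code and hands them
// to a caller-supplied send(batch) function in batches.
class EventTracker {
  constructor(send, batchSize = 10) {
    this.send = send;
    this.batchSize = batchSize;
    this.buffer = [];
  }

  // Called from feature code whenever something worth recording happens.
  track(type, details = {}) {
    this.buffer.push({ type, details, timestamp: Date.now() });
    if (this.buffer.length >= this.batchSize) {
      this.flush();
    }
  }

  // Send whatever is buffered, for example before the page is closed.
  flush() {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.send(batch);
  }
}
```

Because the feature code calls `track` itself, events are captured even when the page content is rewritten dynamically, which is the situation that limits external tracking tools.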