Write about the evaluation


Possible dimensions for evaluation:

- Show how easy it is for new people to participate. Possible measures: total training time, how often new users go back to consult the documentation, and how often they delete things they created because they made mistakes. We could also survey new users. New users will include Jordan, Craig, Hilary, and Gopal (anyone else?).

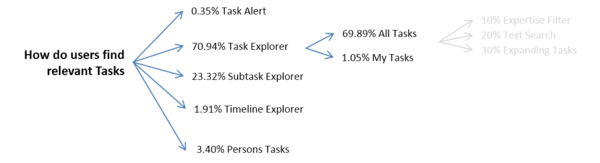

- Show how people find relevant tasks. For example, show how they use search and how they get to the tasks they want to do.

- Show how people track their own tasks. Here we would run a survey asking how easy it is for people to track tasks.

- Survey with categories 1 to 10:
  - My Task Tab
  - Task Alert
  - Person Page
  - Expertise

- Survey with categories 1 to 10:

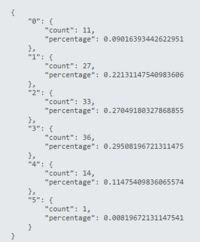

Need to show collaboration touch points:

- Is more than one person signed up to each task?

| Number of participants | Tasks in % |
| --- | --- |
| 0 | 0 |
| 1 | 10 |
| 2 | 25 |
| 3 | 50 |
| 4 | 10 |
| >4 | 5 |

- Is more than one person editing the metadata of tasks?

| Number of metadata editors | Tasks in % |
| --- | --- |
| 1 | 10 |
| 2 | 25 |
| 3 | 50 |
| 4 | 10 |
| >4 | 5 |

- Data Source: Tracking Data.

- Is more than one person editing the content text of tasks?

| Number of content editors | Tasks in % |
| --- | --- |
| 1 | 10 |
| 2 | 25 |
| 3 | 50 |
| 4 | 10 |
| >4 | 5 |

- Data Source: Wiki Logs.
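
A table of this shape could be produced by counting distinct editors per task and grouping the counts into the rows 1, 2, 3, 4, and >4. The following is a minimal sketch only: the `(task_id, editor)` pair format, the function names, and the sample rows are all hypothetical and do not reflect the actual wiki log or tracking data format.

```python
from collections import Counter, defaultdict


def bucket_label(n, cap=4):
    """Return the table row label for a count: '1'..'4', or '>4'."""
    return str(n) if n <= cap else f">{cap}"


def editor_distribution(edits, cap=4):
    """Return {row label: percentage of tasks} by number of distinct editors.

    `edits` is an iterable of (task_id, editor) pairs. This input shape is
    an assumption made for the sketch, not the real log format.
    """
    editors_by_task = defaultdict(set)
    for task_id, editor in edits:
        # A set ignores repeated edits by the same person on the same task.
        editors_by_task[task_id].add(editor)

    total = len(editors_by_task)
    if total == 0:
        return {}
    counts = Counter(bucket_label(len(e), cap) for e in editors_by_task.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


# Made-up sample rows, for illustration only.
sample_edits = [
    ("task-a", "user1"), ("task-a", "user2"), ("task-a", "user1"),
    ("task-b", "user3"),
    ("task-c", "user1"), ("task-c", "user2"), ("task-c", "user3"),
    ("task-c", "user4"), ("task-c", "user5"),
    ("task-d", "user2"),
]
dist = editor_distribution(sample_edits)
```

The same function would apply to metadata editors and to signed-up participants, provided each data source can be reduced to pairs of task and person.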

- Is each person collaborating with more than one other person?

| Number of other persons a person collaborates with | Persons in % |
| --- | --- |
| 1 | 10 |
| 2 | 25 |
| 3 | 50 |
| 4 | 10 |
| >4 | 5 |

- Data Source: JSON Serialized Tasks.
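
One way to derive the per-person numbers is to treat two people as collaborators whenever they appear on the same task, then count each person's distinct collaborators. The sketch below assumes each serialized task carries a `participants` list; that field name, the function names, and the sample JSON are hypothetical, since the actual serialization format is not described here.

```python
import json
from collections import Counter, defaultdict
from itertools import combinations


def collaborator_counts(tasks):
    """Map each person to the number of distinct other people sharing a task."""
    partners = defaultdict(set)
    for task in tasks:
        # "participants" is an assumed field name.
        people = set(task.get("participants", []))
        for person in people:
            partners.setdefault(person, set())  # keep people who work alone
        for a, b in combinations(sorted(people), 2):
            partners[a].add(b)
            partners[b].add(a)
    return {person: len(others) for person, others in partners.items()}


def percent_by_count(counts, cap=4):
    """Return {row label: percentage of persons}, with counts above cap as '>cap'."""
    total = len(counts)
    if total == 0:
        return {}
    buckets = Counter(str(n) if n <= cap else f">{cap}" for n in counts.values())
    return {label: 100.0 * n / total for label, n in buckets.items()}


# Made-up sample data, for illustration only.
raw = """[
  {"id": "t1", "participants": ["ann", "bob", "cy"]},
  {"id": "t2", "participants": ["ann", "dee"]},
  {"id": "t3", "participants": ["eve"]}
]"""
tasks = json.loads(raw)
counts = collaborator_counts(tasks)
shares = percent_by_count(counts)
```

People who never share a task end up in a "0" row, which the draft table does not list; whether to report that row is a choice for the evaluation.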